What You’ll Learn Here

Python provides two libraries, Requests and Beautiful Soup, to help you delete websites more easily. By using Python’s Requests and Beautiful Soup together, you can retrieve the HTML content of a website and then parse it to extract the data you need. In this article, I will show you how to use these libraries with an example.

If you are new to the concept and have a moderate level of experience with Python, you can check out the Python developer course on Hyperskill, to which I contribute as an expert.

By the end of this guide, you will be equipped to create your own Web Scraper and will have a deeper understanding of how to work with large amounts of data and how to apply it to make data-driven decisions.

Please note that while a web scraper is a useful tool, be sure to follow all legal guidelines. This includes respecting the website’s robots.txt file and adhering to the terms of service to prevent unauthorized data extraction.

Also, before scraping, make sure that the scraping process does not damage the functionality of the website or overload its servers. Finally, respect data privacy by not collecting personal or sensitive information without proper consent.

How Beautiful Soup And Python Requests Work Together

Let’s understand the role of each library.

The Python requests library is responsible for retrieving the HTML content from the URL you provide in the script. Once the content is retrieved, it stores the data in a response object.

Beautiful Soup then takes over, transforms the raw HTML of the Requests response into a structured format, and parses it. You can then extract data from the parsed HTML by specifying attributes, allowing you to automate the collection of specific data from websites or repositories.

But this duo has its limits. The requests library cannot handle websites with dynamic JavaScript content. Therefore, you should mainly use it for sites that serve static content from servers. If you need to delete a dynamically loaded site, you will need to use more advanced automation tools like Selenium.

How To Shape A Web Scraper With Python SDK

Now that we understand what Beautiful Soup and Python Requests can do, let’s see how we can recover data using these tools.

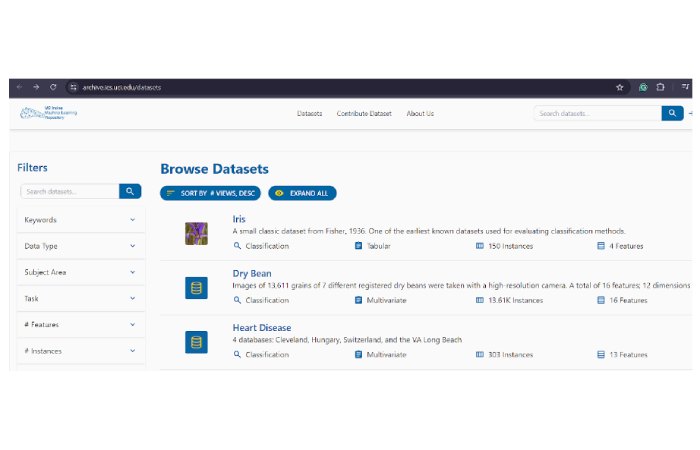

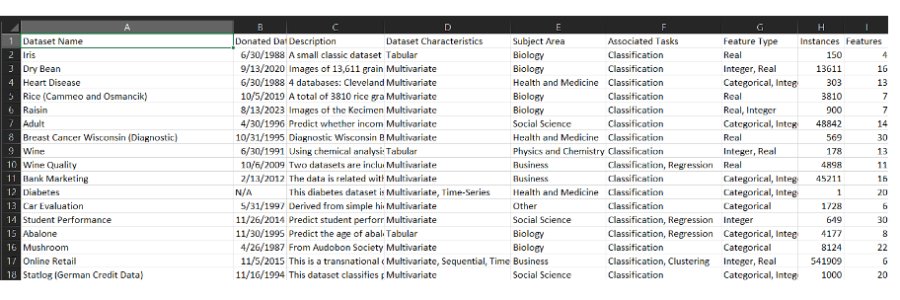

In the following example, we will retrieve data from the UC Irvine machine learning repository.

As you can see, it contains many data sets and you can find more details about each data set by going to a page dedicated to the data set. You can access the devoted page by clicking on the data set name in the list above.

See the image below to get an idea of the information provided for each data set.

The code we write below will go through each data set, retrieve the details, and save them to a CSV file.

Step 1: Import Necessary Libraries

First, import the necessary libraries: queries to make HTTP requests, BeautifulSoup to parse HTML content, and CSV to save data.

Step 2: Define the Base URL and CSV Headers

Set the base URL for the dataset lists and set the headers of the CSV file where the recovered data will be saved.

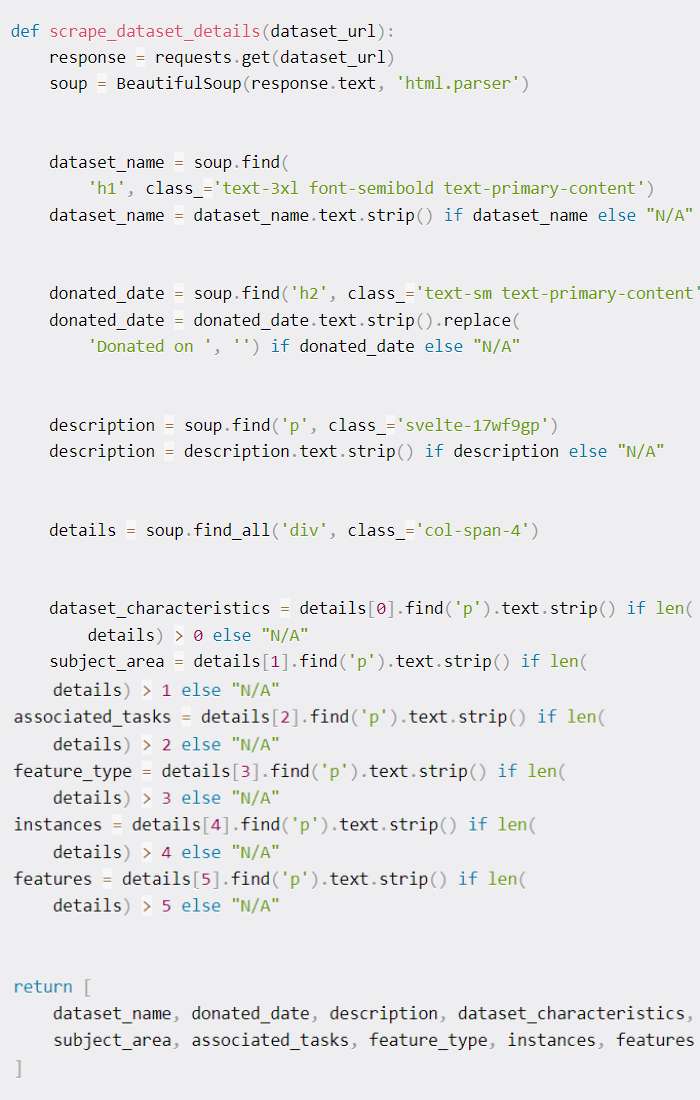

Step 3: Create a Function to Scrape Dataset Details

Define a scrape_dataset_details function that takes the URL of an individual dataset page, retrieves the HTML content, parses it using BeautifulSoup, and extracts the relevant information.

The scrape_dataset_details function retrieves the HTML content of a dataset page and parses it using BeautifulSoup. Extracts information by targeting specific HTML elements based on their tags and classes, such as data set names, donation dates, and descriptions.

The function uses methods such as find and find_all to locate these elements and retrieve their textual content, handling cases where elements may be missing by providing default values.

This systematic approach ensures that relevant details are accurately captured and returned in a structured format.

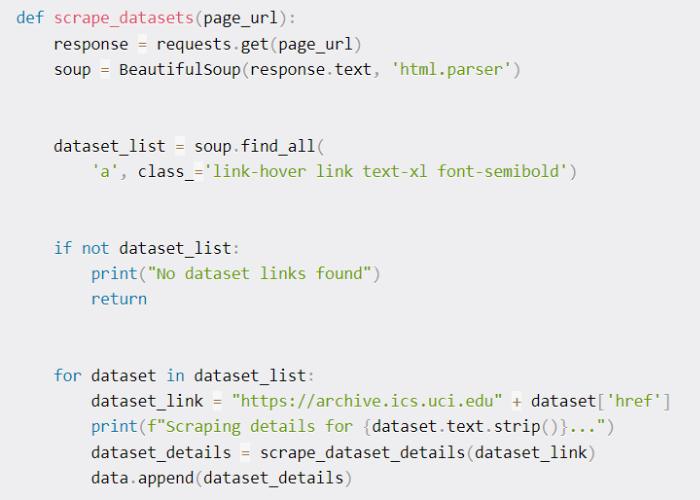

Step 4: Create a Function to Scrape Dataset Listings

Define a scrape_datasets function that takes the URL of a page listing multiple datasets, retrieves the HTML content, and finds all links to the dataset. For each link, call scrape_dataset_details to get detailed information.

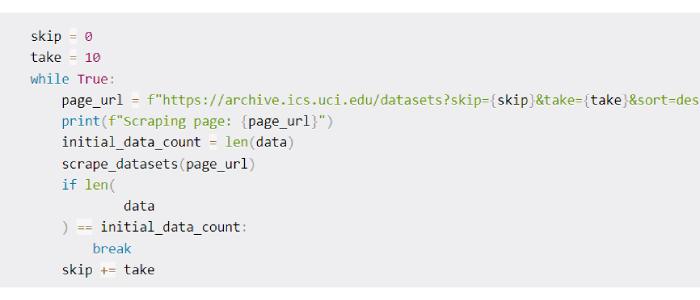

Step 5: Loop Through Pages Using Pagination Parameters

Implement a loop to navigate pages using pagination settings. The loop continues until no new data is added, indicating that all pages have been removed.

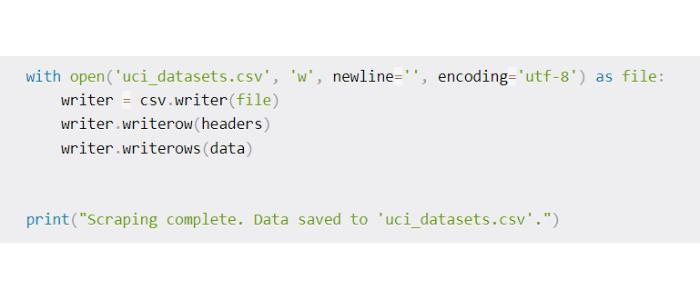

Step 6: Save the Scraped Data to a CSV File

After scraping all the data, save it to a CSV file.

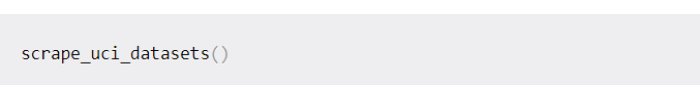

Step 7: Run the Scraping Function

Finally, call the scrape_uci_datasets function to start the scraping process.

Full Code

Here is the complete code for the web scraper:

import requests

from bs4 import BeautifulSoup

import csv

def scrape_uci_datasets():

base_url = “https://archive.ics.uci.edu/datasets”

headers = [

“Dataset Name”, “Donated Date”, “Description”,

“Dataset Characteristics”, “Subject Area”, “Associated Tasks”,

“Feature Type”, “Instances”, “Features”

]

# List to store the scraped data

data = []

def scrape_dataset_details(dataset_url):

response = requests.get(dataset_url)

soup = BeautifulSoup(response.text, ‘html.parser’)

dataset_name = soup.find(

‘h1′, class_=’text-3xl font-semibold text-primary-content’)

dataset_name = dataset_name.text.strip() if dataset_name else “N/A”

donated_date = soup.find(‘h2′, class_=’text-sm text-primary-content’)

donated_date = donated_date.text.strip().replace(

‘Donated on ‘, ”) if donated_date else “N/A”

description = soup.find(‘p’, class_=’svelte-17wf9gp’)

description = description.text.strip() if description else “N/A”

details = soup.find_all(‘div’, class_=’col-span-4′)

dataset_characteristics = details[0].find(‘p’).text.strip() if len(

details) > 0 else “N/A”

subject_area = details[1].find(‘p’).text.strip() if len(

details) > 1 else “N/A”

associated_tasks = details[2].find(‘p’).text.strip() if len(

details) > 2 else “N/A”

feature_type = details[3].find(‘p’).text.strip() if len(

details) > 3 else “N/A”

instances = details[4].find(‘p’).text.strip() if len(

details) > 4 else “N/A”

features = details[5].find(‘p’).text.strip() if len(

details) > 5 else “N/A”

return [

dataset_name, donated_date, description, dataset_characteristics,

subject_area, associated_tasks, feature_type, instances, features

]

def scrape_datasets(page_url):

response = requests.get(page_url)

soup = BeautifulSoup(response.text, ‘html.parser’)

dataset_list = soup.find_all(

‘a’, class_=’link-hover link text-xl font-semibold’)

if not dataset_list:

print(“No dataset links found”)

return

for dataset in dataset_list:

dataset_link = “https://archive.ics.uci.edu” + dataset[‘href’]

print(f”Scraping details for {dataset.text.strip()}…”)

dataset_details = scrape_dataset_details(dataset_link)

data.append(dataset_details)

# Loop through the pages using the pagination parameters

skip = 0

take = 10

while True:

page_url = f”https://archive.ics.uci.edu/datasets?skip={skip}&take={take}&sort=desc&orderBy=NumHits&search=”

print(f”Scraping page: {page_url}”)

initial_data_count = len(data)

scrape_datasets(page_url)

if len(

data

) == initial_data_count:

break

skip += take

with open(‘uci_datasets.csv’, ‘w’, newline=”, encoding=’utf-8′) as file:

writer = csv.writer(file)

writer.writerow(headers)

writer.writerows(data)

print(“Scraping complete. Data saved to ‘uci_datasets.csv’.”)

scrape_uci_datasets()

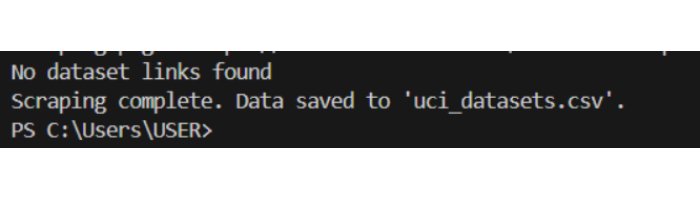

Once you run the script, it will run for a while until the terminal says “No links to dataset found”, followed by “Extraction complete”. Data saved in “uci_datasets.csv””, indicating that the recovered data was saved in a CSV file.

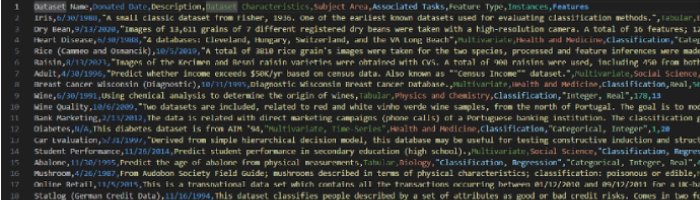

To view the recovered data, open the “uci dataset.csv” file. You should be able to see the data organized by dataset name, donation date, description, features, domain, etc.

You can have a better view of the data if you open the file via Excel.

If you follow the logic mentioned in this article, you can remove many sites. All you have to do is start from the base URL, figure out how to navigate the list, and navigate to the page dedicated to each list item. Next, identify the appropriate page elements, such as IDs and classes, where you can isolate and extract the data you want.

You also need to understand the logic behind pagination. Most of the time, pagination makes slight changes to the URL, which you can use to move from one page to another.

Finally, you can write the data to a CSV file, which is suitable for storage and as input for display.

Conclusion

Using the Python SDK with Requests and Beautiful Soup allows you to create fully functional web scrapers to extract data from websites. While this feature can be very beneficial for data-driven decision making, it is important to keep ethical and legal considerations in mind.

Once you are familiar with the methods used in this script, you can explore techniques such as proxy management and data persistence. You can also familiarize yourself with other libraries like Scrapy, Selenium, and Puppeteer to meet your data collection needs.

Thanks for reading! My name is Jess and I am a Hyperskill expert. You can check out a course for Python developers on the platform.